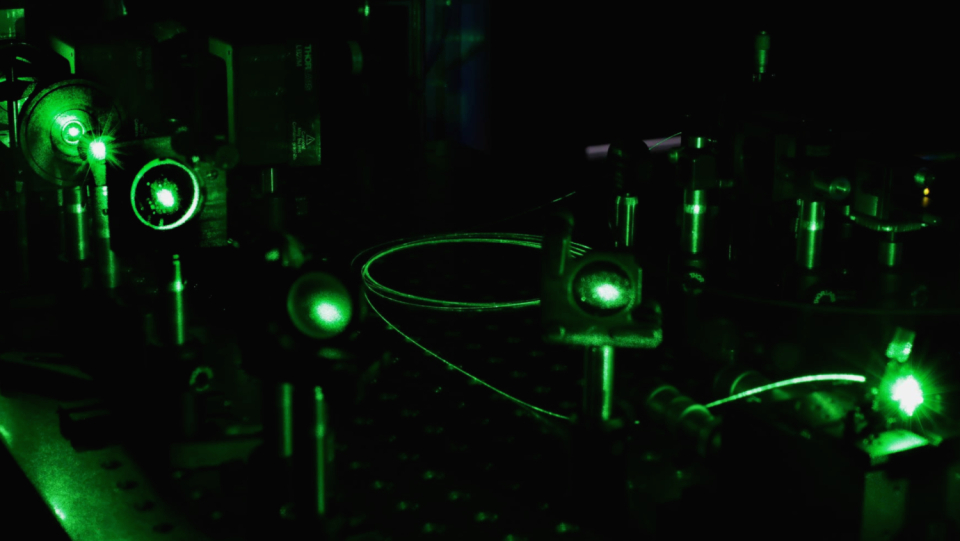

Light has long been established in data transmission. Negligible energy losses and the ability to send information in parallel through a single optical fiber, in the form of pulses of different colors, leave old copper cables no chance. For a Swiss research team, however, the fibers are more than just data channels: they want to use the optical fibers themselves to process the data.

This is made possible by the complex interactions between the light and the material of the glass fiber. Set up skillfully, these interactions can alter the data being processed in just the way a computer chip would otherwise have to. The research group presented how this works in August last year in the journal "Nature Computational Science". Their system, called Solo (Scalable Optical Learning Operator), belongs to the field of artificial intelligence (AI). And although it is still basic research, Solo has already managed to estimate the age of people shown in portrait photos and to detect COVID-19 on lung X-rays, using a fraction of the energy otherwise required.

An ordinary computer chip has the advantage of being universal. It can carry out very different tasks, depending on what it is programmed for. However, if a lot of data has to be processed in the same way, this becomes a disadvantage: the processor handles the individual operations one after another, which costs time compared with a system that handles them in parallel. The problem arises especially in AI. Artificial neural networks, above all the "deep" networks of so-called deep learning, have shown astonishing performance in recent years. They are usually what lies behind the advances in image and speech recognition, and they are making it possible for more and more cars to drive autonomously in more and more situations. But they also bring conventional hardware to its knees, given the sheer volume of data that needs to be processed, especially during training.

If, for example, one and the same mathematical function has to be applied millions of times in a row, normal processors become the bottleneck. This is exactly where optical systems play to their strengths: just as light pulses of different colors transmit data in parallel through the same optical fiber, optical circuits can perform several calculations simultaneously. The fact that such optical circuits can now be implemented as compact chips does the rest to motivate start-ups all over the world to build the first commercial products.

The fiber material takes over the computing

However, the Swiss research team led by Christophe Moser and Demetri Psaltis from the École Polytechnique Fédérale de Lausanne is taking a different, rather unusual approach. While current approaches to optical computing are mostly based on representing concrete numerical values by the intensity of light pulses and manipulating them as skillfully and, above all, as precisely as possible, the Swiss team uses the material properties of the glass fiber to manipulate the signal. How exactly this happens is largely unclear in detail, and yet it works. "This is similar to what goes on in the depths of artificial neural networks," says Moser. "You often have to accept that you can't exactly understand the interaction of the millions of neurons."

In a classic test case for AI applications, the system has to decide whether an image shows a dog or a cat. The experimental setup of the Lausanne research group is quite simple: a laser illuminates a passive screen, which uses the incident light to form the 600-by-600-pixel image that is to be classified. Instead of feeding each of these pixels individually into a deep neural network, as is usual in machine learning, a lens focuses the entire image and couples it into the optical fiber. Inside the fiber, the different regions of the image are mixed together. At the other end, the emerging light is captured by a camera.

Only this camera image is then fed into a conventional but very simple neural network, in which each individual image pixel is represented by its own neuron. Each of these neurons, in turn, is connected to the two output neurons "dog" and "cat". During training, the network is shown the images altered by the optical fiber and learns to recognize which of them originally showed a dog and which a cat. It adjusts the strength of the connections between the pixel neurons and the output neurons until the "dog" neuron is activated by a dog image and the "cat" neuron by the image of a cat.
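The readout network described above can be sketched in a few lines. This is only an illustration under stated assumptions, not the Lausanne group's code: the tiny four-pixel "camera images", the function names `train_readout` and `classify`, and the choice of a softmax output trained by gradient descent are all hypothetical, since the article does not say which training rule was used.

```python
import math
import random


def softmax(scores):
    """Turn raw output-neuron scores into probabilities."""
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def output_scores(weights, biases, pixels):
    """One weighted sum per output neuron: every pixel neuron feeds every output."""
    return [sum(w * p for w, p in zip(row, pixels)) + b
            for row, b in zip(weights, biases)]


def train_readout(images, labels, n_classes=2, lr=0.5, epochs=200, seed=0):
    """Adjust connection strengths until the correct output neuron responds.

    Assumption: plain stochastic gradient descent on a softmax output.
    """
    rng = random.Random(seed)
    n_pixels = len(images[0])
    weights = [[rng.uniform(-0.01, 0.01) for _ in range(n_pixels)]
               for _ in range(n_classes)]
    biases = [0.0] * n_classes
    for _ in range(epochs):
        for pixels, label in zip(images, labels):
            probs = softmax(output_scores(weights, biases, pixels))
            for c in range(n_classes):
                error = probs[c] - (1.0 if c == label else 0.0)
                for i in range(n_pixels):
                    weights[c][i] -= lr * error * pixels[i]
                biases[c] -= lr * error
    return weights, biases


def classify(weights, biases, pixels):
    """Return the index of the most strongly activated output neuron."""
    scores = output_scores(weights, biases, pixels)
    return max(range(len(scores)), key=scores.__getitem__)


# Hypothetical stand-ins for camera images at the fiber output (4 pixels each).
# Label 0 plays the role of "dog", label 1 the role of "cat".
toy_images = [[1.0, 0.9, 0.1, 0.0], [0.8, 1.0, 0.0, 0.2],
              [0.0, 0.1, 1.0, 0.9], [0.2, 0.0, 0.8, 1.0]]
toy_labels = [0, 0, 1, 1]

weights, biases = train_readout(toy_images, toy_labels)
print(classify(weights, biases, [0.9, 0.8, 0.1, 0.1]))
print(classify(weights, biases, [0.1, 0.1, 0.9, 0.8]))
```

The toy data here is deliberately easy to separate. In the setup described in the article, it is the fiber that is supposed to transform real images into a form that such a simple readout can handle.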

If you gave this simple network the raw, unprocessed dog and cat pictures for training, it would have no chance of learning the difference between the animals. In conventional neural networks, a large number of intermediate layers of neurons are therefore inserted between the input pixels of the original image and the output neurons. This results in the many millions of parameters that have to be optimized during training and that all have to be evaluated again every time the network is later used. In the new model, by contrast, the fiber takes over the role of these deep layers. "We only need about one hundredth of the energy of a conventional neural network," says Moser. "However, we are still a few percent worse in terms of the reliability of the classification. That doesn't sound like much, but it's a problem we still have to solve."

Nonlinear effects make it possible

But what happens to the image as it travels through the fiber? And how can this rather simple trick more or less replace the millions of parameters of a neural network that are optimized in an elaborate training process? The answer is nonlinear effects. They are also an indispensable ingredient of conventional neural networks. Nonlinear basically means anything that cannot be represented mathematically by a straight line, that is, anything beyond addition or simple multiplication by a constant. In artificial neural networks, nonlinear functions act as a kind of gatekeeper that decides in each individual neuron whether the incoming signals are passed on to the subsequent nodes or not. Nonlinear "activation functions" ensure that the output of a neuron is not simply proportional to its input signals, but takes on a high value as soon as the activation threshold is even slightly exceeded.
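The difference between a straight-line function and a threshold-like activation can be made concrete with a small sketch. The steep sigmoid used here is just one common choice of activation function, assumed for illustration; the names `linear`, `sigmoid` and `is_additive`, as well as the threshold and steepness values, are hypothetical.

```python
import math


def linear(x, slope=2.0):
    """A straight line through the origin: the output is proportional to the input."""
    return slope * x


def sigmoid(x, threshold=0.0, steepness=10.0):
    """A steep S-shaped activation: low below the threshold, high just above it."""
    return 1.0 / (1.0 + math.exp(-steepness * (x - threshold)))


def is_additive(f, a, b, tol=1e-9):
    """Check f(a + b) == f(a) + f(b), which holds for linear maps like the one above."""
    return abs(f(a + b) - (f(a) + f(b))) < tol


print(is_additive(linear, 0.3, 0.5))   # the linear map passes the check
print(is_additive(sigmoid, 0.3, 0.5))  # the activation function does not
print(sigmoid(-0.3), sigmoid(0.3))     # just below vs. just above the threshold
```

Because linear steps applied one after another only ever add up to another linear step, a network without such an activation function would gain nothing from additional layers.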

"These nonlinear effects are crucial for a network to learn," explains Moser. However, they are usually difficult to reproduce on optical chips. "To create something like this in optics, you usually need very high energies." But because his group confines the light in the glass fiber, so to speak, they were able to achieve the effects there at low light intensity and thus with low energy losses. "The fiber completely mixes the information from the pixels about every half millimeter," says Moser. "This is comparable to the process in a single layer of a deep neural network." With a length of five meters, the current setup corresponds to an extremely deep network.
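Taking the two figures quoted above at face value, a rough count of the mixing steps along the fiber is (the symbol $N$ is introduced here only for illustration):

$$
N \approx \frac{5\,\mathrm{m}}{0.5\,\mathrm{mm}} = \frac{5000\,\mathrm{mm}}{0.5\,\mathrm{mm}} = 10\,000
$$

If each complete mixing is read as one layer, as in Moser's comparison, the fiber would correspond to a network on the order of ten thousand layers deep. This is a back-of-the-envelope estimate, not a figure given by the researchers.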

The researchers themselves are still puzzling over why the result captured by the camera at the fiber output can be classified with just a single additional neural layer. In any case, there are indications that the transformation is not yet optimal. The performance depends, for example, on the exact shape of the glass fiber, so it changes when the fiber is bent or wound differently. Although this offers a way to optimize further by trial and error, the instability is also a problem. "If a commercial product is ever to come of this, the system must be stable enough to keep delivering reliable results for months and years after training," says Moser. The currently loose optical fiber would have to be integrated into a chip. According to Moser, it is already possible today to write a meter-long waveguide into the surface of a silicon chip, within an area of just one square centimeter.

More "ordinary" optical chips are already further along

"Optical computing is particularly promising when it is integrated on chips and relies on elements that are already well under control," says Cornelia Denz, who heads the working group for nonlinear photonics at the Institute for Applied Physics at the University of Münster. Last year she also co-authored a review article in "Nature" on optical computing and artificial intelligence. Such systems integrated on silicon chips are already very advanced and are partly manufactured with the same methods used to produce conventional electronic chips, except that waveguides, beam splitters and other optical elements are written into the silicon instead of transistors. In this way, the advantages of optical computing, such as low losses, high clock rates and the possibility of parallel data processing, could be combined in a promising way. "That is why it can be assumed that this will soon be transferred to commercial products," says Denz. "In the next five to ten years you will probably hear more about it. The current start-ups also show that this is possible."

The chips of the two US start-ups Lightmatter and Lightelligence, for example, appear to be particularly advanced. They are based on the same scientific paper, published in 2017 by researchers at the Massachusetts Institute of Technology. The two lead authors are now competitors in an exciting race for the new technology. From a purely financial point of view, Lightmatter took the lead in 2021 with an additional cash injection of 80 million dollars from GV (formerly Google Ventures) and Hewlett Packard, among others. But competitor Lightelligence can also point to funds totaling 100 million dollars. According to their own statements, both start-ups are on the verge of bringing their respective products to market.

Compared with Solo, Lightelligence and Lightmatter stay relatively close to their electronic counterparts in the architecture of their optical chips, except that they use so-called Mach-Zehnder interferometers as elementary switching elements. These optical components split a light beam into two parts at the input and bring them back together at the output. Since the speed at which the light propagates along the two paths can be changed in a targeted manner, a delay arises when the beams are recombined. In this way, the positions of the wave crests and troughs can be shifted relative to each other, so that the beams interfere either constructively or destructively when they subsequently overlap. The component is essentially a modulator that changes the intensity of a light beam.
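How a delay between the two arms turns into a change in intensity can be sketched with the textbook model of an idealized interferometer. The sketch assumes lossless 50/50 splitting and recombination and looks at only one output port; the function name `mzi_output_intensity` is hypothetical, and none of this describes the actual chips of the start-ups mentioned.

```python
import cmath
import math


def mzi_output_intensity(input_intensity, phase_delay):
    """Intensity at one output of an idealized Mach-Zehnder interferometer.

    Assumptions: lossless 50/50 splitter and combiner, and a phase delay
    (in radians) applied to one of the two arms.
    """
    field = math.sqrt(input_intensity)
    # Split the light equally between the two arms.
    arm_a = field / math.sqrt(2)
    arm_b = field / math.sqrt(2) * cmath.exp(1j * phase_delay)
    # Recombine the arms; crests and troughs now add up or cancel.
    combined = (arm_a + arm_b) / math.sqrt(2)
    return abs(combined) ** 2


for delay in (0.0, math.pi / 2, math.pi):
    print(delay, mzi_output_intensity(1.0, delay))
```

In this idealized model the output follows $I_0 \cos^2(\Delta\varphi/2)$: with no delay the full intensity arrives at this port (constructive interference), while a delay of half a wavelength, a phase of $\pi$, cancels it (destructive interference).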

"With a network of Mach-Zehnder interferometers, basically any mathematical operation can be realized," explains Rolf Drechsler, who heads the research area Cyber-Physical Systems at the German Research Center for Artificial Intelligence and the Working Group for Computer Architecture at the University of Bremen. In recent years, the researcher and his team have developed instructions on how to design optical components for a wide variety of purposes. They have relied on the tiny interferometers because these work best with the current state of the art and are therefore the most promising for future applications. "Basically, it would even be possible to produce a fully functional optical computer," says Drechsler. However, much of this cannot yet be technically implemented with the desired robustness and quality. In addition, a technical application must also be compact and inexpensive to be commercially viable.

The final proof that optics can prevail against silicon electronics, which have been optimized over decades, at least in special AI applications, has yet to be provided by current start-ups. But the time seems ripe to give artificial intelligence new impetus with a bit of optics.